Jaguar is the distributed version of ArrayDB. It is obtained by simply installing ArrayDB on top of a distributed file system such as Gluster or Ceph. In the tests below, ArrayDB deployed this way was faster than Spark SQL on every query we ran.

We set up a cluster of servers running GlusterFS, a distributed file system capable of scaling to several petabytes and handling thousands of clients, and mounted the Gluster volume on each server in the cluster. Spark 1.3.1 was installed on the same servers to benchmark SQL operations on a data set of two million key-value pairs.

For the Spark tests, each Scala program was compiled and run as follows:

    $ vim MyTest.scala
    $ sbt package
    $ spark-submit --class MyTest --master yarn-client target/scala-2.11/mytest_2.11-1.0.jar
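`sbt package` needs a build definition next to `MyTest.scala`, which is not shown here. A minimal `build.sbt` consistent with the jar path above might look like the sketch below. The project name and version are inferred from `mytest_2.11-1.0.jar`; the Scala patch version and the `provided` scope are assumptions, not settings taken from the original test setup.

```scala
// build.sbt (hypothetical; inferred from target/scala-2.11/mytest_2.11-1.0.jar)
name := "MyTest"

version := "1.0"

// Assumption: any 2.11.x release matching the cluster's Spark build.
scalaVersion := "2.11.6"

// "provided" keeps Spark out of the packaged jar, since spark-submit
// supplies these classes at run time.
libraryDependencies ++= Seq(
  "org.apache.spark" %% "spark-core" % "1.3.1" % "provided",
  "org.apache.spark" %% "spark-sql"  % "1.3.1" % "provided"
)
```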

For the Jaguar tests, the data directory under $HOME/arraydb/ was soft-linked to the mount point of the Gluster volume. Client programs were then started on the different hosts in the cluster.

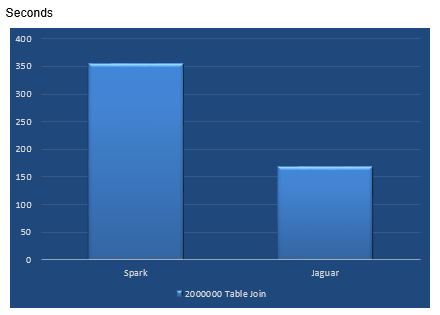

1. Joining two tables, each consisting of 2,000,000 data items (32-byte key, 48-byte value)
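The input files themselves are not included in this post. As a rough sketch of what a record with this shape could look like, the plain-Scala generator below emits comma-separated lines with a 32-character alphanumeric key followed by three value fields. Splitting the 48-byte value into three 16-character fields is an assumption made to match the four-column schema (`uid v1 v2 v3`) used in the Spark program; the object name `GenData` and the fixed seed are illustrative only.

```scala
import scala.util.Random

object GenData {
  private val Alphabet: IndexedSeq[Char] =
    ('a' to 'z') ++ ('A' to 'Z') ++ ('0' to '9')

  // Random alphanumeric string of exactly n characters.
  def randomString(rng: Random, n: Int): String =
    Seq.fill(n)(Alphabet(rng.nextInt(Alphabet.length))).mkString

  // One CSV record: a 32-char uid plus three 16-char values
  // (48 bytes of value in total; the 3 x 16 split is an assumption).
  def record(rng: Random): String =
    (randomString(rng, 32) +: Seq.fill(3)(randomString(rng, 16))).mkString(",")

  def main(args: Array[String]): Unit = {
    // Number of records to print; defaults to a small sample.
    val n = if (args.nonEmpty) args(0).toInt else 10
    val rng = new Random(42)
    Iterator.fill(n)(record(rng)).foreach(println)
  }
}
```

Running it with `2000000` as the argument and redirecting standard output to a file would produce one table's worth of rows.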

MyTest.scala:

    import org.apache.spark.{SparkConf, SparkContext}
    import org.apache.spark.SparkContext._
    import org.apache.spark.sql._
    import org.apache.spark.sql.types.{StructType, StructField, StringType}

    object MyTest {
      def main(args: Array[String]): Unit = {
        val sparkConf = new SparkConf().setAppName("MyTest")
        val sc = new SparkContext(sparkConf)
        val sqlContext = new org.apache.spark.sql.SQLContext(sc)

        // Load the two input files; each line is one comma-separated record.
        val people1 = sc.textFile("hdfs://HD3:9000/home/exeray/2M.txt")
        val people2 = sc.textFile("hdfs://HD3:9000/home/exeray/2M2.txt")

        // All four columns are declared as nullable strings.
        val schemaString = "uid v1 v2 v3"
        val schema = StructType(schemaString.split(" ").map(fieldName => StructField(fieldName, StringType, true)))

        // Turn each text line into a Row with four fields.
        val rowRDD1 = people1.map(_.split(",")).map(p => Row(p(0), p(1), p(2), p(3)))
        val rowRDD2 = people2.map(_.split(",")).map(p => Row(p(0), p(1), p(2), p(3)))

        // applySchema is deprecated as of Spark 1.3 in favor of createDataFrame,
        // but it is still available in 1.3.1.
        val peopleSchemaRDD1 = sqlContext.applySchema(rowRDD1, schema)
        val peopleSchemaRDD2 = sqlContext.applySchema(rowRDD2, schema)

        peopleSchemaRDD1.registerTempTable("people1")
        peopleSchemaRDD2.registerTempTable("people2")

        // Join on uid and print every result row on the driver.
        val res = sqlContext.sql("SELECT * FROM people1 join people2 on people1.uid=people2.uid")
        res.collect().foreach(println)
      }
    }

Jaguar:

    adb> select * join ( 2M, 2M2 );

Result: Spark took 356 seconds, Jaguar took 168 seconds.

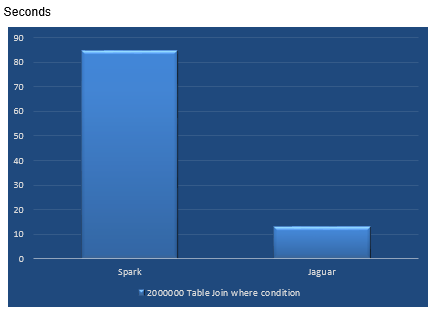

2. Joining two tables with a condition

The Spark Scala program adds a WHERE clause to the join:

    val res = sqlContext.sql("SELECT * FROM people1 join people2 on people1.uid=people2.uid where people1.uid >= 'aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa' and people1.uid <= 'gggggggggggggggggggggggggggggggg'")

The Jaguar query adds the same condition:

    adb> select * join ( 2M, 2M2 ) where 2M.uid >= 'aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa' and 2M.uid <= 'gggggggggggggggggggggggggggggggg';

Result: Spark took 85 seconds, Jaguar took 13 seconds.

3. Counting items within a key range

Spark:

    SELECT count(*) FROM people1 where uid >= 'kkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkk' and uid <= 'mmmmmmmmmmmmmmmmmmmmmmmmmmmmmmmm'

Jaguar:

    select count(*) from 2M2 where uid >= 'kkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkk' and uid <= 'mmmmmmmmmmmmmmmmmmmmmmmmmmmmmmmm' limit 999999999;

Result: Spark took 52 seconds, Jaguar took 0.1 seconds.
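The range conditions in tests 2 and 3 compare string keys against 32-character bounds. The plain-Scala sketch below shows what such a predicate selects, assuming ordinary lexicographic string ordering (how Jaguar orders keys internally is not described here, so treat that part as an assumption). The object name `RangeFilter` is illustrative, and the two sample keys are taken from the point-query test.

```scala
object RangeFilter {
  // Inclusive lexicographic range check on a string key.
  def inRange(uid: String, lo: String, hi: String): Boolean =
    uid.compareTo(lo) >= 0 && uid.compareTo(hi) <= 0

  def main(args: Array[String]): Unit = {
    // The bounds used in the count test: 32 'k' characters to 32 'm' characters.
    val lo = "k" * 32
    val hi = "m" * 32
    val sample = Seq(
      "lZ1wt3llixT0r5jujuwfcKYb0Og2JF05", // starts with 'l': inside the range
      "yGW4r5thqpu7Bb4TCmxtdTpxXTxcOjhk"  // starts with 'y': outside the range
    )
    sample.foreach(k => println(s"$k -> ${inRange(k, lo, hi)}"))
  }
}
```

Under this ordering, only keys whose first character is `k`, `l`, or (for the all-`m` key itself) `m` can match, so the count touches a small slice of the two million rows.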

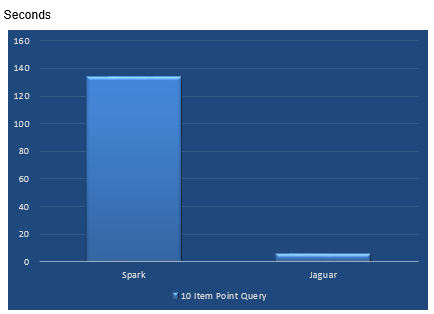

4. Point queries

Spark:

    val res1 = sqlContext.sql("SELECT count(*) FROM people1 where uid = 'yGW4r5thqpu7Bb4TCmxtdTpxXTxcOjhk'")
    res1.collect().foreach(println)
    val res2 = sqlContext.sql("SELECT count(*) FROM people1 where uid = 'lZ1wt3llixT0r5jujuwfcKYb0Og2JF05'")
    res2.collect().foreach(println)
    val res3 = sqlContext.sql("SELECT count(*) FROM people1 where uid = 'YKyoRLuBuYBGTpmQauGgnPZg3FGI3GxZ'")
    res3.collect().foreach(println)
    val res4 = sqlContext.sql("SELECT count(*) FROM people1 where uid = 'YOzKDhmtCN095BVtyJRESRjhamhbJD1H'")
    res4.collect().foreach(println)
    val res5 = sqlContext.sql("SELECT count(*) FROM people1 where uid = 'w0zDgzD2BdWE5sgFxgEL6zBjZckY6mnA'")
    res5.collect().foreach(println)
    val res6 = sqlContext.sql("SELECT count(*) FROM people1 where uid = 'HhB6p8srwRG4PpHCgT1IG1jKJU0PXDJE'")
    res6.collect().foreach(println)
    val res7 = sqlContext.sql("SELECT count(*) FROM people1 where uid = '4Fpqf8JLORNavhwnthF7olySkAk0ggOj'")
    res7.collect().foreach(println)
    val res8 = sqlContext.sql("SELECT count(*) FROM people1 where uid = 'tvApMnTzxc8SCkyRiSnTWtIYUHJQc91E'")
    res8.collect().foreach(println)
    val res9 = sqlContext.sql("SELECT count(*) FROM people1 where uid = '1Sg72G7ubanKSiYkzOqaGf9VvQjIVDLV'")
    res9.collect().foreach(println)
    val res10 = sqlContext.sql("SELECT count(*) FROM people1 where uid = 'omo64Q5VxjzhDs148tNzrW4sGk4ouASS'")
    res10.collect().foreach(println)
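The ten Spark statements differ only in the key, so the same test could also be written as a loop over a key list. The sketch below builds the query strings in plain Scala so it runs without a cluster; the names `PointQueries` and `sparkQuery` are illustrative and not part of the original test program.

```scala
object PointQueries {
  // The ten keys looked up in the point-query test.
  val keys: Seq[String] = Seq(
    "yGW4r5thqpu7Bb4TCmxtdTpxXTxcOjhk",
    "lZ1wt3llixT0r5jujuwfcKYb0Og2JF05",
    "YKyoRLuBuYBGTpmQauGgnPZg3FGI3GxZ",
    "YOzKDhmtCN095BVtyJRESRjhamhbJD1H",
    "w0zDgzD2BdWE5sgFxgEL6zBjZckY6mnA",
    "HhB6p8srwRG4PpHCgT1IG1jKJU0PXDJE",
    "4Fpqf8JLORNavhwnthF7olySkAk0ggOj",
    "tvApMnTzxc8SCkyRiSnTWtIYUHJQc91E",
    "1Sg72G7ubanKSiYkzOqaGf9VvQjIVDLV",
    "omo64Q5VxjzhDs148tNzrW4sGk4ouASS"
  )

  // Same SQL text as the hand-written statements, parameterized by key.
  def sparkQuery(uid: String): String =
    s"SELECT count(*) FROM people1 where uid = '$uid'"

  def main(args: Array[String]): Unit =
    // In MyTest, each string would instead be passed to
    // sqlContext.sql(q).collect().foreach(println).
    keys.map(sparkQuery).foreach(println)
}
```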

Jaguar:

    select * from 2M where uid=yGW4r5thqpu7Bb4TCmxtdTpxXTxcOjhk;
    select * from 2M where uid=lZ1wt3llixT0r5jujuwfcKYb0Og2JF05;
    select * from 2M where uid=YKyoRLuBuYBGTpmQauGgnPZg3FGI3GxZ;
    select * from 2M where uid=YOzKDhmtCN095BVtyJRESRjhamhbJD1H;
    select * from 2M where uid=w0zDgzD2BdWE5sgFxgEL6zBjZckY6mnA;
    select * from 2M where uid=HhB6p8srwRG4PpHCgT1IG1jKJU0PXDJE;
    select * from 2M where uid=4Fpqf8JLORNavhwnthF7olySkAk0ggOj;
    select * from 2M where uid=tvApMnTzxc8SCkyRiSnTWtIYUHJQc91E;
    select * from 2M where uid=1Sg72G7ubanKSiYkzOqaGf9VvQjIVDLV;
    select * from 2M where uid=omo64Q5VxjzhDs148tNzrW4sGk4ouASS;

Result: Spark took 134 seconds, Jaguar took 0.7 seconds.

Conclusion: Jaguar was faster than Spark in all four tests, and much faster for conditional queries, especially when indexes are used.
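To put the four results side by side, the small Scala program below computes the Spark-to-Jaguar time ratio for each test from the timings reported above. The test labels and the object name `Speedup` are illustrative; the numbers are the ones given in the Result lines.

```scala
object Speedup {
  // (test, Spark seconds, Jaguar seconds), as reported in the Result lines.
  val results: Seq[(String, Double, Double)] = Seq(
    ("full join",        356.0, 168.0),
    ("conditional join",  85.0,  13.0),
    ("range count",       52.0,   0.1),
    ("point queries",    134.0,   0.7)
  )

  // How many times longer Spark took than Jaguar.
  def speedup(spark: Double, jaguar: Double): Double = spark / jaguar

  def main(args: Array[String]): Unit =
    for ((name, spark, jaguar) <- results)
      println(f"$name%-18s ${speedup(spark, jaguar)}%.1fx")
}
```

The ratios come out at roughly 2.1x for the full join, 6.5x for the conditional join, 520x for the range count, and 191x for the ten point queries.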